Superposition sounds like something from a physics class, yet in neural networks it names a simple idea. A model often stores many features in the same group of neurons instead of giving each feature its own spot. One unit might light up for a curve in an image, a hint of sarcasm in a sentence, or a texture on a surface, depending on what else is present.

People call such units polysemantic neurons. Think of a small studio apartment with furniture that does double duty. The couch is also a bed. The table is also a desk. The space is used well, yet it is hard to assign one clean label to any item. That is the heart of superposition and the reason tidy, single-sentence explanations do not stick.

Training pushes weights to reduce error on many patterns at once. When the number of useful features exceeds the number of independent directions a layer can offer, the model starts sharing directions in activation space. Even when a network has many parameters, regularizers and sparsity pressure still reward reuse.

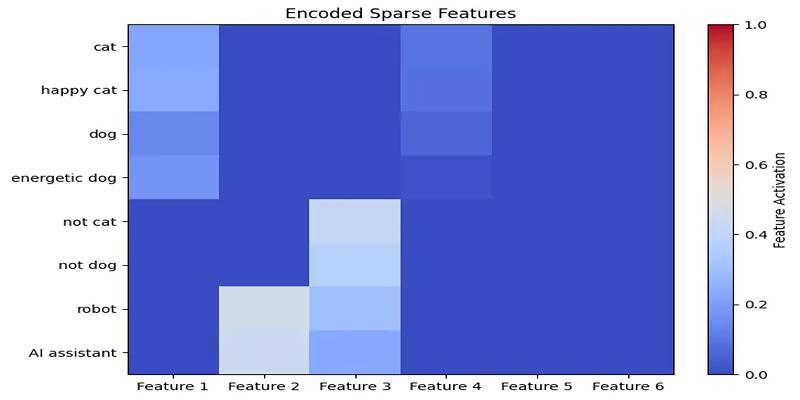

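If two features rarely appear together, the optimizer can place them in overlapping, non-orthogonal directions, because the interference between them only costs accuracy on the rare inputs where both are present. The system then relies on context to separate them. It is a packing trick that saves space while keeping accuracy high.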
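A toy sketch of this packing trick, assuming three hypothetical features squeezed into a two-dimensional activation space with directions 120 degrees apart, and a hypothetical downstream readout that takes a dot product with each direction followed by ReLU. Nothing here comes from a trained model; the numbers only show why rare co-occurrence makes sharing cheap.

```python
import math

# Hypothetical toy setup: three features share a 2-D activation space.
# Their directions sit 120 degrees apart, so no two are orthogonal.
ANGLES = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]
DIRECTIONS = [(math.cos(a), math.sin(a)) for a in ANGLES]


def encode(features):
    """Map feature strengths to a 2-D activation by summing scaled directions."""
    x, y = 0.0, 0.0
    for value, (dx, dy) in zip(features, DIRECTIONS):
        x += value * dx
        y += value * dy
    return (x, y)


def decode(activation):
    """Hypothetical readout: dot product with each direction, then ReLU."""
    ax, ay = activation
    return [max(0.0, ax * dx + ay * dy) for dx, dy in DIRECTIONS]


def rounded(values, digits=3):
    return [round(v, digits) for v in values]


# One feature at a time (the common case): recovered cleanly.
print(rounded(decode(encode([1.0, 0.0, 0.0]))))  # [1.0, 0.0, 0.0]

# Two features at once (the rare case): they interfere.
print(rounded(decode(encode([1.0, 1.0, 0.0]))))  # [0.5, 0.5, 0.0]
```

When one feature is active, the readout recovers it. When two fire together, each reads back at half strength, which is the interference cost the optimizer can accept because such inputs are rare.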

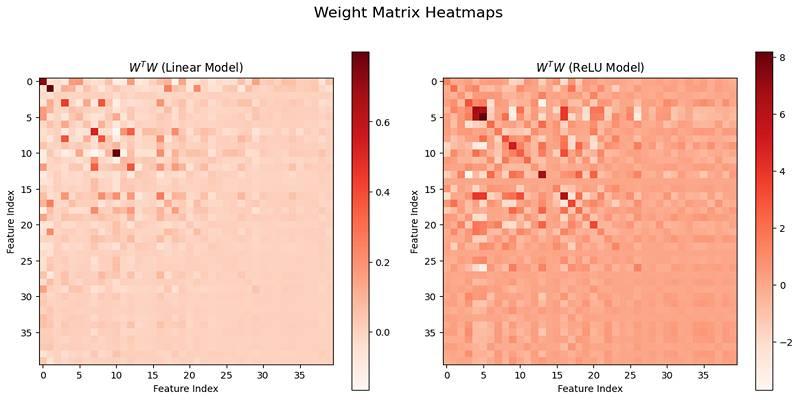

Inside a layer, activations form a high-dimensional cloud. Each feature is not a clean axis. It is a direction at some angle to others. If every feature had its own orthogonal direction lined up with a single neuron, we could say this neuron means stripes and that neuron means red.

Real layers show angles and overlaps. A strong activation often reflects a blend of several features at once. Which feature matters most depends on what other signals arrive at the same moment. That conditional meaning frustrates simple heat maps and single-neuron stories, because the story shifts with context.
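A small sketch of angles, overlaps, and context, assuming four made-up feature directions in a layer of only three neurons. The feature names and vectors are hypothetical; `cosine` measures how far two directions are from orthogonal, and `layer_activation` sums the directions of whatever features are present.

```python
import math

# Hypothetical directions for four features in a layer with three neurons.
# With more features than neurons, the directions cannot all be orthogonal.
FEATURES = {
    "curve": (0.8, 0.6, 0.0),
    "sarcasm": (0.6, 0.0, 0.8),
    "texture": (0.5, -0.5, 0.7071),
    "stripes": (0.0, 1.0, 0.0),
}


def cosine(u, v):
    """Cosine of the angle between two directions (0 means orthogonal)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def layer_activation(active):
    """Add up feature directions, each scaled by how strongly that feature is present."""
    out = [0.0, 0.0, 0.0]
    for name, strength in active.items():
        for i, component in enumerate(FEATURES[name]):
            out[i] += strength * component
    return out


# Curve and sarcasm overlap: their directions are not orthogonal.
print(round(cosine(FEATURES["curve"], FEATURES["sarcasm"]), 2))  # 0.48

# Neuron 0 reads 0.8 in two different contexts.
curve_only = layer_activation({"curve": 1.0})
blend = layer_activation({"sarcasm": 0.5, "texture": 1.0})
print(round(curve_only[0], 2), round(blend[0], 2))  # 0.8 0.8
```

Neuron 0 alone cannot tell the two inputs apart. The full activation vectors, (0.8, 0.6, 0.0) for the curve and roughly (0.8, -0.5, 1.107) for the blend, can.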

A packed closet teaches the same lesson. One hanger holds a jacket and a scarf. If you peek and spot the scarf, you might guess that the hanger is for scarves only. Pull it out, and the jacket swings into view. Many neurons behave like that.

With a narrow set of inputs, they look single-purpose. With a wide set, they reveal two or three roles that rarely clash. This is not an accidental mess. It is a learned strategy to stretch capacity.

Shared directions can collide. A tweak that helps one feature can hurt another if their directions overlap too much. During training, the gradients pull in different directions, and the network settles into a trade-off that works across the training data.
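The collision can be seen with two overlapping directions and a single gradient step. This is a minimal sketch under assumed values: `D_A`, `D_B`, the learning rate, and the linear readout are all hypothetical.

```python
# Hypothetical 2-D feature directions that overlap (cosine 0.6).
D_A = (1.0, 0.0)
D_B = (0.6, 0.8)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# A linear readout that is supposed to respond to feature A only.
readout = (0.0, 0.0)
learning_rate = 0.5

# The gradient of dot(readout, D_A) with respect to readout is D_A itself,
# so one gradient-ascent step on feature A's score moves readout along D_A.
readout = tuple(r + learning_rate * g for r, g in zip(readout, D_A))

print(round(dot(readout, D_A), 3))  # 0.5: the score for A went up, as intended
print(round(dot(readout, D_B), 3))  # 0.3: the score for B went up too
```

The leak into B equals the learning rate times the cosine between the two directions (0.5 times 0.6). If the directions were orthogonal, the step would leave B untouched.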

That is why a neuron can appear to switch meaning as training progresses or as the dataset shifts. The unit never had one meaning to begin with. It was part of a shared subspace whose role changes with the neighborhood around it.

Linear probes, saliency maps, and neat top-k visualizations give quick answers. They also tend to assume clean separation. In a superposed space, a probe may latch onto a neuron because its activity correlates with a feature in your sample, not because it owns that feature. Change the sample and the interpretation moves.
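A plain correlation check, standing in for a probe, shows how the sample can decide the story. The unit, the features, and both samples are hypothetical; the unit simply adds features A and B.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def neuron(a, b):
    """Hypothetical unit that carries features A and B in superposition."""
    return [x + y for x, y in zip(a, b)]


# Sample 1: feature B never shows up, so the unit looks like a pure A detector.
a1 = [0, 1, 0, 1, 1, 0]
b1 = [0, 0, 0, 0, 0, 0]
print(round(pearson(neuron(a1, b1), a1), 2))  # 1.0

# Sample 2: A and B both vary, and the same unit tracks each only partly.
a2 = [0, 1, 0, 1, 0, 1]
b2 = [1, 0, 0, 1, 1, 0]
print(round(pearson(neuron(a2, b2), a2), 2))  # 0.58
print(round(pearson(neuron(a2, b2), b2), 2))  # 0.58
```

On the first sample the unit looks like a perfect A detector. On the second, it correlates equally with A and with B, so the label depends on which data you happened to probe with.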

Attributions spread importance across many mixed directions, and then heuristics try to simplify the picture. That simplification reads well yet can be brittle. The safer habit is to test interpretations against held-out data and against small, targeted edits to the inputs, and see whether the story holds.
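A targeted input edit can be sketched as a simple check: change a feature that the interpretation claims the unit ignores, and see whether the activation moves. Both units and the `survives_edit` helper are hypothetical illustrations, not a library API.

```python
def survives_edit(unit, base, irrelevant_key, delta=1.0, tol=1e-9):
    """Return True if editing a feature the interpretation calls irrelevant
    leaves the unit's activation unchanged (within tol)."""
    edited = dict(base)  # copy so the base input is not mutated
    edited[irrelevant_key] += delta
    return abs(unit(**edited) - unit(**base)) <= tol


# Two hypothetical units. Both look like "feature A detectors" on data where
# B is always 0, but only the first one really ignores B.
def pure_a_unit(a, b):
    return 2.0 * a  # b is accepted but ignored


def superposed_unit(a, b):
    return 2.0 * a + 1.5 * b


base_input = {"a": 1.0, "b": 0.0}
print(survives_edit(pure_a_unit, base_input, "b"))      # True
print(survives_edit(superposed_unit, base_input, "b"))  # False
```

Both units agree on any data where B stays at zero, which is exactly the situation in which a quick probe would label them the same.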

Some layers look sparse, where only a few units fire. Others look dense, where many twitch a little. Superposition thrives in both. In a sparse code, the few active units can still carry mixed meanings split by sign or magnitude. In a dense code, the next layer recombines many small signals to tease out the feature you care about.
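Sign-based sharing can be shown with one unit and a downstream split. This sketch assumes a hypothetical unit where feature A pushes the activation up and feature B pushes it down, and a next layer that separates them with `relu(x)` and `relu(-x)`.

```python
def relu(x):
    return max(0.0, x)


def shared_unit(a, b):
    """Hypothetical unit: feature A pushes it positive, feature B negative.
    This only works cleanly if A and B rarely appear together."""
    return a - b


def read_features(unit_value):
    """A downstream layer can split the two meanings by sign."""
    return relu(unit_value), relu(-unit_value)


print(read_features(shared_unit(0.7, 0.0)))  # (0.7, 0.0)
print(read_features(shared_unit(0.0, 0.4)))  # (0.0, 0.4)
print(read_features(shared_unit(0.7, 0.4)))  # both present: A reads about 0.3, B vanishes
```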

Either way, the mapping from one unit to one tidy concept rarely fits. Special losses that force sparsity may help readability, yet the packing habit remains whenever many features compete for limited capacity.

People like circuit diagrams that show clean paths from a pattern to a decision. Circuits exist, yet they are often braided. A single connection can serve two different subcircuits that rarely fire together. When inputs shift, the same path can carry a different meaning.

This reuse may help explain why pruning can drop a good share of weights with only a small loss in accuracy, and why weight-sharing tricks keep working. It also explains why static diagrams that bind one job to each neuron can give false confidence.

Better stories come from methods that respect mixed spaces. Study directions instead of single units. Use sparse autoencoders to unpack blends into more atomic features while holding the model fixed.
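A bare-bones sketch of the sparse autoencoder objective, assuming a two-dimensional activation, three latent features, and hand-set tied weights. Real sparse autoencoders learn their weights from many activations recorded from the frozen model, typically use far more latents than input dimensions, and often add details such as a decoder bias; all of that is omitted here, and every name below is hypothetical.

```python
import math

# Hypothetical sparse autoencoder over a 2-D activation with 3 latents
# (an overcomplete dictionary). Weights are hand-set for illustration;
# a real SAE learns them by minimizing sae_loss over many activations
# collected from the frozen model.
ANGLES = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]
W_DEC = [(math.cos(t), math.sin(t)) for t in ANGLES]  # one direction per latent
W_ENC = W_DEC  # tied weights: a simplifying assumption, not a requirement
B_ENC = [0.0, 0.0, 0.0]
L1_COEFF = 0.1  # hypothetical sparsity weight


def encode(x):
    """Latent codes f = ReLU(W_enc x + b_enc); ReLU keeps codes non-negative."""
    return [max(0.0, w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(W_ENC, B_ENC)]


def decode(f):
    """Reconstruction x_hat = sum over latents of f_i times decoder direction i."""
    return [sum(fi * w[k] for fi, w in zip(f, W_DEC)) for k in range(2)]


def sae_loss(x):
    """Squared reconstruction error plus an L1 penalty that rewards sparse codes."""
    f = encode(x)
    x_hat = decode(f)
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    sparsity = L1_COEFF * sum(abs(v) for v in f)
    return reconstruction + sparsity, f


loss, codes = sae_loss([1.0, 0.0])
print([round(c, 3) for c in codes])  # [1.0, 0.0, 0.0]: one latent explains the input
print(round(loss, 3))                # 0.1: zero reconstruction error plus the L1 term
```

Training would adjust `W_ENC`, `W_DEC`, and `B_ENC` to lower this loss averaged over many activations; the L1 term is what pushes each input to be explained by only a few latents.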

Track not only the presence of a feature but also the effect of removing a direction across many examples. These steps do not hand out perfect answers, yet they produce explanations that travel better from one dataset to another.
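Removing a direction means projecting it out of each activation vector and measuring what changes downstream. A minimal sketch, assuming a hypothetical unit-length direction, a hypothetical linear readout, and three made-up activation vectors in place of a real dataset.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def ablate(x, d):
    """Project out unit direction d: x - (x . d) d."""
    coeff = dot(x, d)
    return [xi - coeff * di for xi, di in zip(x, d)]


def mean_ablation_effect(examples, d, readout):
    """Average absolute change in a downstream readout when d is removed."""
    changes = [abs(dot(readout, x) - dot(readout, ablate(x, d))) for x in examples]
    return sum(changes) / len(changes)


# Hypothetical setup: a unit-length direction under study, a downstream
# readout, and a few activation vectors standing in for "many examples".
direction = (0.6, 0.8)
readout = (1.0, 0.0)
examples = [(3.0, 4.0), (4.0, -3.0), (1.0, 2.0)]

print(round(mean_ablation_effect(examples, direction, readout), 2))  # 1.44
```

The second example is orthogonal to the direction, so ablation leaves it unchanged; the average across examples, not any single case, is the quantity worth tracking.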

People need mental models that fit in their heads. Superposition does not cancel plain language. It asks for honesty about trade-offs. Say that features live across many neurons and share space. Say that context picks which meaning shows up.

Avoid claiming that a neuron equals a named concept. Frame it as a chorus where several voices sing louder or softer depending on the song. That picture is humble and still useful when you are debugging, auditing, or teaching.

Superposition is the habit of packing several meanings into the same neural space. It grows from training pressure, sparsity, and the geometry of activations where directions overlap. The benefit is efficient use of capacity. The cost is that single-neuron definitions break. Signals collide, roles shift with context, and quick probes can misread blends as pure concepts. Clear thinking comes from studying directions, interactions, and the effect of small, targeted changes, not from pinning a fixed label on one unit.
