Large language models have dominated headlines for the past couple of years, but the tide is shifting. Researchers across academia and industry are turning their attention to smaller, more efficient models. The reasons are varied—technical, economic, and practical—but the trend is gaining momentum fast. While massive models like GPT-4 and Gemini are still pushing benchmarks, there's a growing recognition that smaller models often get the job done at a fraction of the cost. In edge applications, enterprise deployments, and tightly scoped tools, small language models are not only viable, but they're also becoming preferable.

Scaling up language models comes with high computational costs. Inference latency, energy consumption, and GPU demand rise steeply with model size. For real-time applications, this becomes a problem. A 7B parameter model might deliver similar utility as a 70B model for specific tasks, yet operate with far lower latency and hardware needs. This has changed how researchers think about trade-offs.

Open-source efforts like TinyLLaMA, Phi-2, and Mistral 7B are gaining traction partly because they can run on consumer-grade GPUs or even on-device in some scenarios. Teams no longer need racks of A100s to experiment, deploy, or test. The result is a more iterative, accessible development cycle, where developers can fine-tune and deploy quickly, without a sprawling infrastructure budget.

Even inference optimization strategies like quantization and LoRA (Low-Rank Adaptation) are seeing wider adoption as teams look to compress large models down to usable footprints. Models distilled from larger foundations are starting to outperform older giants, especially in domain-specific tasks. This reframing of the cost-performance ratio is shifting priorities across research labs and commercial teams alike.

Running a model in production is different from topping a leaderboard. Enterprises face constraints that benchmarks don’t reflect. A model that works well in a controlled test environment might falter under load, or fail compliance checks due to data residency or privacy constraints. Smaller models are easier to audit, monitor, and retrain. Their simpler architectures mean fewer edge cases and less fragility during inference.

Companies building customer support agents, content moderation systems, or internal tooling don’t always need bleeding-edge capabilities. What they need is reliability, speed, and manageable resource demands. This is especially true for firms with on-premise requirements or latency-sensitive workflows. A fine-tuned 3B model can respond in milliseconds, making it usable in places where a 70B model simply won’t fit the latency envelope.

Some firms are also discovering that smaller models are more interpretable. Engineers can trace outputs more easily, validate behavior, and debug inconsistencies. This is critical in regulated industries like finance and healthcare, where explainability isn’t a bonus—it’s mandatory.

General-purpose performance is not always the goal. A model that excels across 50 tasks might still struggle with the exact phrasing or data format a business needs. Smaller models are proving ideal for surgical fine-tuning. Because they’re faster to train, easier to overfit, and more responsive to task-specific objectives, teams can craft narrow, high-performing models without starting from scratch or investing in heavy infrastructure.

This doesn’t mean small models are inherently better. They often lack the emergent behaviors seen in larger architectures. But for tasks like document classification, form extraction, question answering over structured corpora, or writing canned responses, they’re often enough. What’s changed is that researchers are leaning into that “enough,” rather than chasing generalization as the only benchmark that matters.

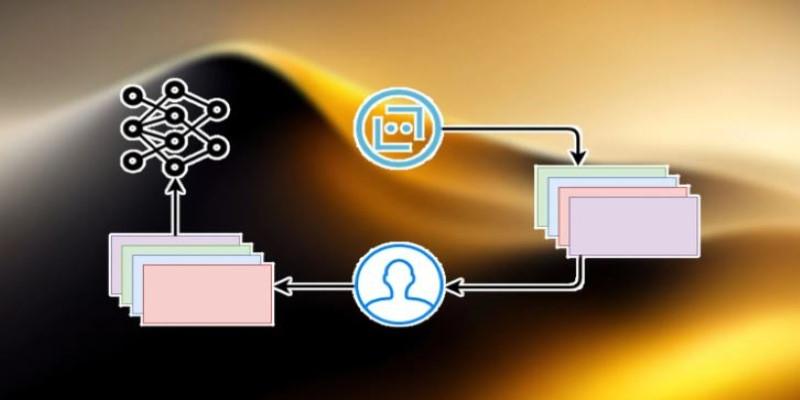

Instruction tuning, reinforcement learning with human feedback (RLHF), and retrieval-augmented generation (RAG) pipelines are now being adapted for small models. These methods, once reserved for larger architectures, are showing strong returns when applied to targeted use cases. The shift toward small models doesn’t mean giving up on sophistication; it just means applying it differently. In many settings, the additional precision outweighs the loss in scale.

Open weights and permissive licenses have accelerated experimentation. Developers can download, modify, and deploy small models without legal friction or API constraints. This makes them ideal for prototyping and rapid iteration. Models like Phi-2, LLaMA derivatives, and Mistral-7B have created a playground where researchers can run ablations, test modifications, and evaluate performance at their own pace.

Many of these models are trained on more curated datasets than their predecessors. Instead of scraping large swaths of the web, they rely on filtered corpora, synthetic data, and instruction-heavy formats that improve downstream performance. This is making smaller models more competitive in benchmarks that matter, like MMLU, GSM8K, and NaturalInstructions.

Open evaluation platforms are keeping this progress visible. Tools like EleutherAI’s evals, Hugging Face’s leaderboard, and lmsys.org’s comparison interface let developers test small models head-to-head with their larger counterparts. In some categories, the gap is closing faster than expected. In others, it’s no longer a gap but a choice—between generality and precision, between flexibility and control, between scale and stability.

Open models also foster community. From blog posts on quantization tricks to shared Hugging Face spaces that let users test models in-browser, there's a grassroots momentum driving the small model wave. It's technical, iterative, and informed by deployment feedback, not just abstract goals. Each contribution, whether from an individual or a team, adds practical knowledge.

Small language models aren’t a downgrade. They’re a recalibration. As organizations get more serious about deploying AI in real-world systems, the priorities shift. Cost, latency, interpretability, and control matter more than sheer size. Researchers are adapting, not out of constraint, but because the smaller models are proving to be better fits for many jobs. With open weights, efficient training methods, and community-backed tooling, the small model ecosystem is maturing quickly. This doesn’t mean the end of large models, but it does signal a more layered landscape—where size is just one variable among many, and not always the most important one.

Failures often occur without visible warning. Confidence can mask instability.

We’ve learned that speed is not judgment. Explore the technical and philosophical reasons why human discernment remains the irreplaceable final layer in any critical decision-making pipeline.

Understand AI vs Human Intelligence with clear examples, strengths, and how human reasoning still plays a central role

Writing proficiency is accelerated by personalized, instant feedback. This article details how advanced computational systems act as a tireless writing mentor.

Mastercard fights back fraud with artificial intelligence, using real-time AI fraud detection to secure global transactions

AI code hallucinations can lead to hidden security risks in development workflows and software deployments

Small language models are gaining ground as researchers prioritize performance, speed, and efficient AI models

How generative AI is transforming the music industry, offering groundbreaking tools and opportunities for artists, producers, and fans alike.

Exploring the rise of advanced robotics and intelligent automation, showcasing how dexterous machines are transforming industries and shaping the future.

What a smart home is, how it works, and how home automation simplifies daily living with connected technology

Bridge the gap between engineers and analysts using shared language, strong data contracts, and simple weekly routines.

Optimize your organization's success by effectively implementing AI with proper planning, data accuracy, and clear objectives.