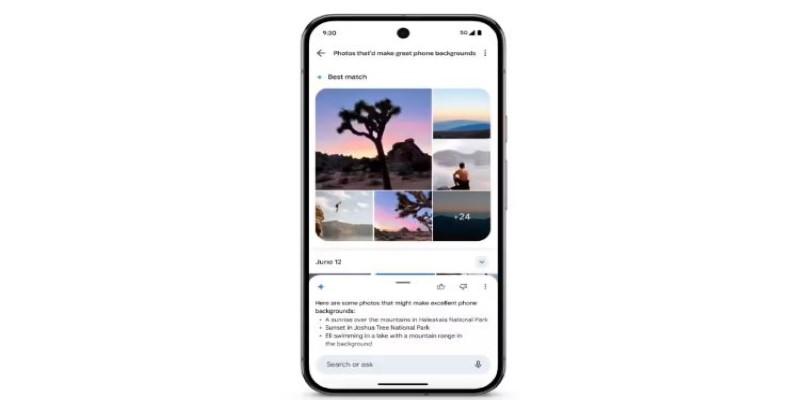

When Google first teased its new AI-powered “Ask Photos” tool for Google Photos, most people assumed it would be another overhyped product that struggled in real-world use. The idea sounded ambitious: ask your photo library questions in plain language, such as “When did I last go hiking with Sarah?” or “Show me photos of my dog playing in the snow,” and get meaningful, accurate results. Skepticism was fair, since past attempts at AI search in Photos didn’t always understand context or details well. Now, though, the feature seems to work much better, and it’s usable for everyday needs.

Ask Photos is built on Google’s Gemini AI system, which brings natural language understanding into Google Photos. Instead of relying solely on timestamps, face recognition, and location tags, the tool is now able to interpret phrasing and context the way people talk. This means users can type or speak requests the same way they would ask a friend, and the tool attempts to respond in a way that feels relevant.

In practice, if you ask, “When did we last celebrate Dad’s birthday at the beach?” the AI goes beyond scanning dates. It looks for images matching known faces, beach settings, past groupings of photos that align with “celebration” events, and even captions or objects like cakes or party hats. That’s a significant jump from simply recognizing people and places. And it’s not just answering with folders or albums—it gives direct results.

The feature is rolling out gradually within the Google Photos app and currently works for users who have opted in to Gemini-powered experiences. It can't answer everything, but it's surprisingly capable of understanding what a user might mean, not just what they typed.

Older versions of AI in Google Photos could identify objects and faces, but asking nuanced questions usually led to mixed results. For instance, searching for "that road trip in 2019 where we saw the mountains" would often return a scatter of mountain images from various times and places, without the context of the road trip. "Ask Photos" changes that by attempting to piece together the narrative behind the images.

The difference lies in the AI's ability to pull from multiple signals: visual elements, photo metadata, user history, patterns, and even Google’s broader language training. It doesn't just look for the phrase “road trip” in image labels; it works out which cluster of photos might represent a road trip, especially one where certain people appear, mountains are visible, and the time frame matches 2019.
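To make the idea of combining signals concrete, here is a minimal Python sketch of scoring photo clusters for the road-trip query above. It is an illustration only, not how Ask Photos or Gemini is implemented: the `PhotoCluster` type, the `score_cluster` and `best_cluster` functions, the weights, and the 200 km "road trip" threshold are all assumptions made up for this example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PhotoCluster:
    """Hypothetical group of photos taken close together in time."""
    name: str
    year: int
    labels: set[str] = field(default_factory=set)  # visual labels, e.g. "mountain"
    people: set[str] = field(default_factory=set)  # recognized faces
    distance_km: float = 0.0                       # ground covered across the cluster


def score_cluster(cluster, wanted_labels, wanted_people, wanted_year):
    """Combine several weak signals into one score (weights are arbitrary)."""
    score = 0.0
    if wanted_labels:
        # Fraction of the requested visual labels found in the cluster.
        score += 2.0 * len(cluster.labels & wanted_labels) / len(wanted_labels)
    if wanted_people:
        # Fraction of the requested people who appear in the cluster.
        score += 1.0 * len(cluster.people & wanted_people) / len(wanted_people)
    if wanted_year is not None and cluster.year == wanted_year:
        score += 2.0
    if cluster.distance_km > 200:
        # Crude "road trip" hint: the photos span a long distance.
        score += 1.0
    return score


def best_cluster(clusters, wanted_labels, wanted_people, wanted_year):
    """Return the cluster with the highest combined score."""
    return max(
        clusters,
        key=lambda c: score_cluster(c, wanted_labels, wanted_people, wanted_year),
    )


# Made-up sample library.
CLUSTERS = [
    PhotoCluster("hike_2021", 2021, {"mountain", "trail"}, {"Sarah"}, 5),
    PhotoCluster("road_trip_2019", 2019, {"mountain", "car", "highway"}, {"Sarah", "Alex"}, 900),
    PhotoCluster("beach_2019", 2019, {"beach", "sand"}, {"Alex"}, 10),
]

match = best_cluster(CLUSTERS, {"mountain"}, set(), 2019)
print(match.name)
```

A plain label search for "mountain" would return both the 2021 hike and the 2019 road trip. Adding the year and the distance signals is what separates them in this toy version.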

It also keeps track of the conversation. If you ask a question and then follow up with “What about the one before that?”, it maintains the thread, something earlier iterations couldn't manage. This thread-based context is what makes it feel much more usable now, particularly when trying to surface memories across years of stored images.
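The follow-up behavior can be pictured as a session that remembers what the last answer pointed at. The Python sketch below is a simplified assumption of how such thread context might work, not Google's design: the `PhotoSearchSession` class, its `latest` and `previous` methods, and the keyword matching are hypothetical stand-ins for real language understanding.

```python
from datetime import date


class PhotoSearchSession:
    """Hypothetical session that keeps context between questions."""

    def __init__(self, events):
        # events: (date, description) pairs, kept sorted oldest to newest.
        self.events = sorted(events)
        self.keyword = None  # what the last question was about
        self.cursor = None   # index of the event given in the last answer

    def _search_back(self, start):
        """Scan backwards from `start` for an event matching the keyword."""
        for i in range(start, -1, -1):
            if self.keyword in self.events[i][1]:
                self.cursor = i
                return self.events[i]
        return None

    def latest(self, keyword):
        """Answer 'When did I last ...?' with the newest matching event."""
        self.keyword = keyword
        self.cursor = None
        return self._search_back(len(self.events) - 1)

    def previous(self):
        """Answer 'What about the one before that?' from remembered context."""
        if self.cursor is None:
            return None
        return self._search_back(self.cursor - 1)


# Made-up sample events.
session = PhotoSearchSession([
    (date(2019, 6, 1), "hiking with Sarah"),
    (date(2021, 8, 14), "hiking with Sarah"),
    (date(2022, 1, 3), "birthday dinner"),
    (date(2023, 5, 20), "hiking with Sarah"),
])

first = session.latest("hiking")  # most recent hike
second = session.previous()       # the hike before that
print(first[0], second[0])
```

The follow-up call takes no arguments: everything it needs (the topic and the position in the timeline) comes from the earlier question, which is the essence of thread-based context.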

People store thousands of photos in their libraries, most of which end up buried under more recent uploads. Manually digging through years of pictures is time-consuming, and search has often felt clunky, useful only when you remember exact dates or specific labels. With Ask Photos, casual queries work.

Parents can ask for “first day of school photos of Mia” or “pictures of Liam at the lake house” and get pretty accurate results. The AI can differentiate between events like holidays, birthdays, and ordinary outings, even when they happen at the same place. It understands concepts like “our trip to Yosemite when it snowed” versus “our trip to Yosemite with the red cabin.” That’s context awareness, something AI image search has lacked.

It’s also helpful in making sense of photos that haven’t been organized. If you’re one of the millions of users who never labeled albums or added captions, you can still get value from this feature. The AI draws from faces, locations, objects, and even gestures or emotions (like someone blowing out candles or opening gifts) to identify patterns. It doesn’t need you to do the work first.

And while it’s not perfect, the margin for error is getting narrower. In testing, the feature handled complex queries decently—especially when people’s names and locations were involved. It did struggle with vague emotional moments like “when we were all laughing in the kitchen,” but even then, it surfaced close results rather than missing the mark entirely.

Ask Photos is part of a broader shift toward smarter, more intuitive tools that help people make sense of their digital history. As cloud libraries grow larger, features like this are less about tech novelty and more about usability. People want to recall specific memories without sorting through 12,000 photos manually. They want a tool that gets what they mean, not just what they typed.

The technology powering Ask Photos is still evolving, but it's now in a place where it feels like a real part of the user experience—not a beta feature or tech demo. It shows how AI can do more than just automate or organize—it can interpret.

As more people try it out, the feature will likely get smarter. The feedback loop is already helping fine-tune its ability to understand natural speech and connect it with visual memories. In the long term, it could transform how we interact with our photo libraries altogether—not by adding more filters or features, but by making them quietly more intelligent.

Ask Photos may have seemed like another flashy AI promise when it was first announced, but it’s quickly becoming something far more practical. It now performs well enough to help users dig through years of digital memories in a way that feels natural and useful. The technology still has its rough edges, but for the first time, Google’s AI photo search is more than a gimmick—it’s genuinely helpful. For anyone tired of endless scrolling and forgotten albums, this feature is starting to make digital photo libraries actually usable again.
